PREREQUISITES

Make sure you've read Rendering Concepts first. This page builds on those concepts and discusses how to render objects in the world.

This page covers some more modern rendering concepts, in particular the two phases that rendering is split into: "extraction" (or "preparation") and "drawing" (or "rendering"). In this guide, we call them the "extraction" phase and the "drawing" phase.

You have two options for rendering custom objects in the world. You can hook into existing vanilla rendering and add your code there, but that limits you to the existing vanilla render pipelines. If none of them suit your needs, you need a custom render pipeline.

Before we get into custom render pipelines, let's look at vanilla rendering.

The Extraction and Drawing Phases

As mentioned in Rendering Concepts, recent Minecraft updates have been progressively splitting rendering into two phases: "extraction" and "drawing".

All data needed for rendering is collected during the "extraction" phase. For example, writing vertices to a BufferBuilder via buffer.addVertex is part of the "extraction" phase. Note that even though many methods are prefixed with draw or render, they should be called during the "extraction" phase. Add all elements you want to render during this phase.

When the "extraction" phase is done, the "drawing" phase starts: the BufferBuilder is built, and the resulting data is uploaded to the GPU and drawn to the screen. The ultimate goal of this split is to allow drawing the previous frame in parallel with extracting the next one, improving performance.
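The handoff between the two phases can be illustrated with a small plain-Java sketch. It is not how Minecraft implements the split: Box, ExtractedFrame and FrameHandoff are hypothetical names. Extraction collects everything into an immutable snapshot and publishes it in one step; drawing takes the latest snapshot, so drawing one frame does not block extracting the next.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical data for one box: plain values only, so another thread can read it safely.
record Box(double minX, double minY, double minZ, double maxX, double maxY, double maxZ) {}

// Hypothetical snapshot of everything the drawing phase needs for one frame.
record ExtractedFrame(long frameIndex, List<Box> boxes) {
    ExtractedFrame {
        boxes = List.copyOf(boxes); // immutable copy: later changes by extraction cannot leak in
    }
}

// Hypothetical handoff between the "extraction" and "drawing" phases.
final class FrameHandoff {
    private final AtomicReference<ExtractedFrame> published = new AtomicReference<>();

    // End of the extraction phase: publish the finished snapshot atomically.
    void publish(ExtractedFrame frame) {
        published.set(frame);
    }

    // Start of the drawing phase: take the latest snapshot, or null if none is ready.
    // While this snapshot is being drawn, extraction can already build the next one.
    ExtractedFrame takeForDrawing() {
        return published.getAndSet(null);
    }
}
```

Because the snapshot is immutable, the two phases never touch the same mutable data, which is what makes overlapping them possible in the first place.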

Now, with these two phases in mind, let's look at how to create a custom render pipeline.

Custom Render Pipelines

Let's say we want to render waypoints that are visible through walls. The closest vanilla pipeline for that is RenderPipelines#DEBUG_FILLED_BOX, but it doesn't render through walls, so we need a custom render pipeline.
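Whether a box shows through walls is decided by the depth test: each fragment's depth is compared with the depth already stored for that pixel. Below is a minimal sketch, assuming depth values in [0, 1] where smaller means closer to the camera. DepthTest is a hypothetical enum, not Minecraft's DepthTestFunction; ALWAYS models the effect of NO_DEPTH_TEST.

```java
// Hypothetical model of two depth-test behaviours (not Minecraft's DepthTestFunction).
enum DepthTest {
    // Typical world rendering: draw a fragment only if it is not behind what is already there.
    LESS_OR_EQUAL {
        @Override
        boolean passes(float fragmentDepth, float storedDepth) {
            return fragmentDepth <= storedDepth;
        }
    },
    // The effect of disabling the depth test: every fragment is drawn, even behind walls.
    ALWAYS {
        @Override
        boolean passes(float fragmentDepth, float storedDepth) {
            return true;
        }
    };

    // fragmentDepth: depth of the fragment being drawn; storedDepth: depth already in the buffer.
    abstract boolean passes(float fragmentDepth, float storedDepth);
}
```

With a wall at depth 0.3 in front of a waypoint at depth 0.8, the waypoint fails LESS_OR_EQUAL but passes ALWAYS, which is why the custom pipeline below only needs to change the depth test.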

Defining a Custom Render Pipeline

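We define the custom render pipeline as a static field in a class. It starts from the vanilla DEBUG_FILLED_SNIPPET and disables depth testing, so the box is drawn even when blocks are in front of it: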

```java
private static final RenderPipeline FILLED_THROUGH_WALLS = RenderPipelines.register(RenderPipeline.builder(RenderPipelines.DEBUG_FILLED_SNIPPET)
        .withLocation(Identifier.fromNamespaceAndPath(ExampleMod.MOD_ID, "pipeline/debug_filled_box_through_walls"))
        .withDepthTestFunction(DepthTestFunction.NO_DEPTH_TEST)
        .build()
);
```

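RenderPipeline.builder(RenderPipelines.DEBUG_FILLED_SNIPPET) starts from the settings bundled in the snippet, and each with... call overrides one of them; here only the location and the depth test change. The same "copy a shared base, override a few fields" pattern looks like this in plain Java. Snippet, Pipeline and DepthMode are hypothetical types and the shader names in the usage are placeholders; this is not Minecraft's builder.

```java
// Hypothetical, simplified stand-ins; not Minecraft's RenderPipeline API.
enum DepthMode { LESS_OR_EQUAL, NONE }

// Shared defaults, like a snippet: no location, just settings to start from.
record Snippet(String vertexShader, String fragmentShader, boolean translucent, DepthMode depthMode) {}

record Pipeline(String location, String vertexShader, String fragmentShader, boolean translucent, DepthMode depthMode) {

    static Builder builder(Snippet base) {
        return new Builder(base);
    }

    static final class Builder {
        private final Snippet base;
        private String location;
        private DepthMode depthMode;

        private Builder(Snippet base) {
            this.base = base;
            this.depthMode = base.depthMode(); // inherited unless overridden
        }

        Builder withLocation(String location) {
            this.location = location;
            return this;
        }

        Builder withDepthMode(DepthMode depthMode) {
            this.depthMode = depthMode;
            return this;
        }

        Pipeline build() {
            // This sketch requires every concrete pipeline to have its own location.
            if (location == null) {
                throw new IllegalStateException("a pipeline needs a location");
            }
            return new Pipeline(location, base.vertexShader(), base.fragmentShader(), base.translucent(), depthMode);
        }
    }
}
```

Everything not overridden (shaders, blending) comes from the snippet, so a new pipeline only has to state how it differs.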

Extraction Phase

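We start with the "extraction" phase. Call renderWaypoint during the "extraction" phase to add a waypoint to be rendered. It translates the pose stack by the negated camera position, so the box can be given in world coordinates, and then writes four vertices per face of the box into the BufferBuilder: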

```java
private static final ByteBufferBuilder allocator = new ByteBufferBuilder(RenderType.SMALL_BUFFER_SIZE);
private BufferBuilder buffer;

private void renderWaypoint(WorldRenderContext context) {
    PoseStack matrices = context.matrices();
    Vec3 camera = context.worldState().cameraRenderState.pos;

    matrices.pushPose();
    matrices.translate(-camera.x, -camera.y, -camera.z);

    if (buffer == null) {
        buffer = new BufferBuilder(allocator, FILLED_THROUGH_WALLS.getVertexFormatMode(), FILLED_THROUGH_WALLS.getVertexFormat());
    }

    renderFilledBox(matrices.last().pose(), buffer, 0f, 100f, 0f, 1f, 101f, 1f, 0f, 1f, 0f, 0.5f);

    matrices.popPose();
}

private void renderFilledBox(Matrix4fc positionMatrix, BufferBuilder buffer, float minX, float minY, float minZ, float maxX, float maxY, float maxZ, float red, float green, float blue, float alpha) {
    // Front face
    buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);

    // Back face
    buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);

    // Left face
    buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);

    // Right face
    buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);

    // Top face
    buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);

    // Bottom face
    buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
    buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
}
```


Note that the buffer size passed to the ByteBufferBuilder constructor depends on the render pipeline you are using. In our case, it is RenderType.SMALL_BUFFER_SIZE.
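How many bytes the extracted data takes follows from the pipeline's vertex format and draw mode. The arithmetic below is a sketch that assumes a position + RGBA color layout like DefaultVertexFormat.POSITION_COLOR (three 4-byte floats plus four 1-byte color channels, 16 bytes per vertex) and QUADS mode, where each quad is drawn as two triangles. BoxBufferMath is a hypothetical helper.

```java
// Hypothetical helper: buffer sizes for boxes written as six quads each.
final class BoxBufferMath {
    // Assumed layout: 3 position floats (12 bytes) + 4 color bytes (4 bytes).
    static final int BYTES_PER_VERTEX = 3 * Float.BYTES + 4;
    static final int FACES_PER_BOX = 6;
    static final int VERTICES_PER_QUAD = 4;
    static final int INDICES_PER_QUAD = 6; // two triangles per quad

    static int verticesFor(int boxes) {
        return boxes * FACES_PER_BOX * VERTICES_PER_QUAD;
    }

    static int vertexBytesFor(int boxes) {
        return verticesFor(boxes) * BYTES_PER_VERTEX;
    }

    static int indicesFor(int boxes) {
        return boxes * FACES_PER_BOX * INDICES_PER_QUAD;
    }

    // Splits each quad (v0, v1, v2, v3) into the triangles (v0, v1, v2) and (v2, v3, v0).
    static int[] quadIndices(int quads) {
        int[] indices = new int[quads * INDICES_PER_QUAD];
        for (int q = 0; q < quads; q++) {
            int v = q * VERTICES_PER_QUAD;
            int i = q * INDICES_PER_QUAD;
            indices[i] = v;
            indices[i + 1] = v + 1;
            indices[i + 2] = v + 2;
            indices[i + 3] = v + 2;
            indices[i + 4] = v + 3;
            indices[i + 5] = v;
        }
        return indices;
    }
}
```

Under these assumptions one waypoint box is 24 vertices, 384 bytes of vertex data and 36 indices, so a single waypoint is tiny compared to a general-purpose buffer.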

To render multiple waypoints, call this method once per waypoint (for example, after changing it to take the waypoint's position as a parameter instead of using the hardcoded box). Make sure you do so during the "extraction" phase, before the "drawing" phase starts and the BufferBuilder is built.
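A plain-Java sketch of extracting several waypoints into one batch follows. Waypoint and WaypointExtractor are hypothetical; the float arrays stand in for BufferBuilder vertices, the camera subtraction mirrors matrices.translate(-camera.x, -camera.y, -camera.z), and the face order matches renderFilledBox. Every waypoint is appended before the single build(), and adding after that is rejected, mirroring the rule that extraction must finish before drawing starts.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical waypoint: the block it marks.
record Waypoint(int x, int y, int z) {}

// Hypothetical stand-in for the extraction step; each vertex is {x, y, z}, camera-relative.
final class WaypointExtractor {
    private final List<float[]> vertices = new ArrayList<>();
    private boolean built;

    void addWaypoint(Waypoint wp, double camX, double camY, double camZ) {
        if (built) {
            throw new IllegalStateException("extraction already finished for this frame");
        }
        // Same effect as translating by the negated camera position
        float minX = (float) (wp.x() - camX), minY = (float) (wp.y() - camY), minZ = (float) (wp.z() - camZ);
        float maxX = minX + 1f, maxY = minY + 1f, maxZ = minZ + 1f;
        // Four corners per face: front, back, left, right, top, bottom
        float[][] corners = {
            {minX, minY, maxZ}, {maxX, minY, maxZ}, {maxX, maxY, maxZ}, {minX, maxY, maxZ},
            {maxX, minY, minZ}, {minX, minY, minZ}, {minX, maxY, minZ}, {maxX, maxY, minZ},
            {minX, minY, minZ}, {minX, minY, maxZ}, {minX, maxY, maxZ}, {minX, maxY, minZ},
            {maxX, minY, maxZ}, {maxX, minY, minZ}, {maxX, maxY, minZ}, {maxX, maxY, maxZ},
            {minX, maxY, maxZ}, {maxX, maxY, maxZ}, {maxX, maxY, minZ}, {minX, maxY, minZ},
            {minX, minY, minZ}, {maxX, minY, minZ}, {maxX, minY, maxZ}, {minX, minY, maxZ},
        };
        for (float[] corner : corners) {
            vertices.add(corner);
        }
    }

    // Drawing phase: "build" once, after every waypoint has been added.
    List<float[]> build() {
        built = true;
        return List.copyOf(vertices);
    }
}
```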

Render States

Note that in the above code we save the BufferBuilder in a field, because we need it in the "drawing" phase. In this case, the BufferBuilder is our "render state" or "extracted data". If you need additional data during the "drawing" phase, create a custom render state class that holds the BufferBuilder and any additional rendering data you need.
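A custom render state can be as simple as a class that owns the vertex bytes plus whatever extra values the drawing phase reads. The sketch below is hypothetical: WaypointRenderState and its fade field are illustrative names, and it packs vertices into a java.nio.ByteBuffer with an assumed 16-byte position + RGBA layout instead of using BufferBuilder. The drawing phase receives a read-only view of exactly the bytes that were written.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical render state: filled during extraction, read during drawing.
final class WaypointRenderState {
    // Assumed layout per vertex: 3 floats position + 4 unsigned bytes RGBA
    static final int BYTES_PER_VERTEX = 16;

    final ByteBuffer vertexData;
    int vertexCount;
    float fade = 1f; // example of additional data that only the drawing phase uses

    WaypointRenderState(int maxVertices) {
        vertexData = ByteBuffer.allocateDirect(maxVertices * BYTES_PER_VERTEX).order(ByteOrder.nativeOrder());
    }

    // Extraction phase: append one vertex
    void addVertex(float x, float y, float z, float r, float g, float b, float a) {
        vertexData.putFloat(x).putFloat(y).putFloat(z);
        vertexData.put(toByte(r)).put(toByte(g)).put(toByte(b)).put(toByte(a));
        vertexCount++;
    }

    // Drawing phase: read-only view over the written bytes.
    // Views start out big-endian, so the native order is set again explicitly.
    ByteBuffer finishForDrawing() {
        return vertexData.duplicate().flip().asReadOnlyBuffer().order(ByteOrder.nativeOrder());
    }

    // Start of the next frame's extraction
    void reset() {
        vertexData.clear();
        vertexCount = 0;
        fade = 1f;
    }

    private static byte toByte(float channel) {
        return (byte) Math.round(Math.max(0f, Math.min(1f, channel)) * 255f);
    }
}
```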

Drawing Phase

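Now we implement the "drawing" phase. drawFilledThroughWalls should be called after all waypoints you want to render have been added to the BufferBuilder during the "extraction" phase. It builds the buffer, uploads the vertices to a MappableRingBuffer, issues the draw call, and then rotates the ring buffer: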

```java
private static final Vector4f COLOR_MODULATOR = new Vector4f(1f, 1f, 1f, 1f);
private static final Vector3f MODEL_OFFSET = new Vector3f();
private static final Matrix4f TEXTURE_MATRIX = new Matrix4f();
private MappableRingBuffer vertexBuffer;

private void drawFilledThroughWalls(Minecraft client, @SuppressWarnings("SameParameterValue") RenderPipeline pipeline) {
    // Build the buffer
    MeshData builtBuffer = buffer.buildOrThrow();
    MeshData.DrawState drawParameters = builtBuffer.drawState();
    VertexFormat format = drawParameters.format();

    GpuBuffer vertices = upload(drawParameters, format, builtBuffer);

    draw(client, pipeline, builtBuffer, drawParameters, vertices, format);

    // Rotate the vertex buffer so we are less likely to use buffers that the GPU is using
    vertexBuffer.rotate();
    buffer = null;
}

private GpuBuffer upload(MeshData.DrawState drawParameters, VertexFormat format, MeshData builtBuffer) {
    // Calculate the size needed for the vertex buffer
    int vertexBufferSize = drawParameters.vertexCount() * format.getVertexSize();

    // Initialize or resize the vertex buffer as needed
    if (vertexBuffer == null || vertexBuffer.size() < vertexBufferSize) {
        if (vertexBuffer != null) {
            vertexBuffer.close();
        }

        vertexBuffer = new MappableRingBuffer(() -> ExampleMod.MOD_ID + " example render pipeline", GpuBuffer.USAGE_VERTEX | GpuBuffer.USAGE_MAP_WRITE, vertexBufferSize);
    }

    // Copy vertex data into the vertex buffer
    CommandEncoder commandEncoder = RenderSystem.getDevice().createCommandEncoder();

    try (GpuBuffer.MappedView mappedView = commandEncoder.mapBuffer(vertexBuffer.currentBuffer().slice(0, builtBuffer.vertexBuffer().remaining()), false, true)) {
        MemoryUtil.memCopy(builtBuffer.vertexBuffer(), mappedView.data());
    }

    return vertexBuffer.currentBuffer();
}

private static void draw(Minecraft client, RenderPipeline pipeline, MeshData builtBuffer, MeshData.DrawState drawParameters, GpuBuffer vertices, VertexFormat format) {
    GpuBuffer indices;
    VertexFormat.IndexType indexType;

    if (pipeline.getVertexFormatMode() == VertexFormat.Mode.QUADS) {
        // Sort the quads if there is translucency
        builtBuffer.sortQuads(allocator, RenderSystem.getProjectionType().vertexSorting());
        // Upload the index buffer
        indices = pipeline.getVertexFormat().uploadImmediateIndexBuffer(builtBuffer.indexBuffer());
        indexType = builtBuffer.drawState().indexType();
    } else {
        // Use the general shape index buffer for non-quad draw modes
        RenderSystem.AutoStorageIndexBuffer shapeIndexBuffer = RenderSystem.getSequentialBuffer(pipeline.getVertexFormatMode());
        indices = shapeIndexBuffer.getBuffer(drawParameters.indexCount());
        indexType = shapeIndexBuffer.type();
    }

    // Actually execute the draw
    GpuBufferSlice dynamicTransforms = RenderSystem.getDynamicUniforms()
            .writeTransform(RenderSystem.getModelViewMatrix(), COLOR_MODULATOR, MODEL_OFFSET, TEXTURE_MATRIX);

    try (RenderPass renderPass = RenderSystem.getDevice()
            .createCommandEncoder()
            .createRenderPass(() -> ExampleMod.MOD_ID + " example render pipeline rendering", client.getMainRenderTarget().getColorTextureView(), OptionalInt.empty(), client.getMainRenderTarget().getDepthTextureView(), OptionalDouble.empty())) {
        renderPass.setPipeline(pipeline);

        RenderSystem.bindDefaultUniforms(renderPass);
        renderPass.setUniform("DynamicTransforms", dynamicTransforms);

        // Bind texture if applicable:
        // Sampler0 is used for texture inputs in vertices
        // renderPass.bindTexture("Sampler0", textureSetup.texure0(), textureSetup.sampler0());

        renderPass.setVertexBuffer(0, vertices);
        renderPass.setIndexBuffer(indices, indexType);

        // The base vertex is the starting index when we copied the data into the vertex buffer divided by vertex size
        //noinspection ConstantValue
        renderPass.drawIndexed(0 / format.getVertexSize(), 0, drawParameters.indexCount(), 1);
    }

    builtBuffer.close();
}
```

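The vertexBuffer.rotate() call is what lets the CPU write the next frame's vertices while the GPU may still be reading the previous frame's. Below is a plain-Java model of that idea. BufferRing is hypothetical and is not how MappableRingBuffer is implemented; the slot count used in practice is not something this page specifies.

```java
import java.nio.ByteBuffer;

// Hypothetical model of a ring of vertex buffers (not MappableRingBuffer's implementation).
final class BufferRing {
    private final ByteBuffer[] slots;
    private int current;

    BufferRing(int slotCount, int bytesPerSlot) {
        if (slotCount < 2) {
            throw new IllegalArgumentException("need at least two slots to rotate");
        }
        slots = new ByteBuffer[slotCount];
        for (int i = 0; i < slotCount; i++) {
            slots[i] = ByteBuffer.allocate(bytesPerSlot);
        }
    }

    // The slot the CPU writes this frame's vertices into
    ByteBuffer currentBuffer() {
        return slots[current];
    }

    int currentIndex() {
        return current;
    }

    // Called after submitting the draw: the next frame writes to a different slot,
    // so it does not overwrite data the GPU may still be reading.
    void rotate() {
        current = (current + 1) % slots.length;
    }

    // Capacity of each slot, comparable to the size check in upload()
    int size() {
        return slots[0].capacity();
    }
}
```

A slot is only written again after a full cycle of rotations, which gives the GPU several frames to finish with it.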

Cleaning up

Finally, we need to clean up resources when the game renderer is closed. This method should be called from GameRenderer#close; currently, that requires injecting into GameRenderer#close with a mixin.

```java
public void close() {
    allocator.close();

    if (vertexBuffer != null) {
        vertexBuffer.close();
        vertexBuffer = null;
    }
}
```


```java
package com.example.docs.mixin.client;

import org.spongepowered.asm.mixin.Mixin;
import org.spongepowered.asm.mixin.injection.At;
import org.spongepowered.asm.mixin.injection.Inject;
import org.spongepowered.asm.mixin.injection.callback.CallbackInfo;

import net.minecraft.client.renderer.GameRenderer;

import com.example.docs.rendering.CustomRenderPipeline;

@Mixin(GameRenderer.class)
public class GameRendererMixin {
    @Inject(method = "close", at = @At("RETURN"))
    private void onGameRendererClose(CallbackInfo ci) {
        CustomRenderPipeline.getInstance().close();
    }
}
```

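The null check and the vertexBuffer = null reset in close() matter because the vertex buffer is only created lazily on the first upload, and nulling it means a later call neither closes the same buffer twice nor reuses a closed one (upload() would simply allocate a fresh buffer). A hypothetical plain-Java model of that pattern, with TrackedResource and LazyVertexBufferHolder as illustrative names:

```java
// Hypothetical stand-in for a GPU or native resource that should be released once.
final class TrackedResource implements AutoCloseable {
    private int closeCount;

    @Override
    public void close() {
        closeCount++;
    }

    int closeCount() {
        return closeCount;
    }
}

// Mirrors how the vertex buffer field is handled: created lazily, closed and nulled on cleanup.
final class LazyVertexBufferHolder {
    private TrackedResource vertexBuffer;

    TrackedResource getOrCreate() {
        if (vertexBuffer == null) {
            vertexBuffer = new TrackedResource();
        }
        return vertexBuffer;
    }

    void close() {
        if (vertexBuffer != null) {
            vertexBuffer.close();
            vertexBuffer = null;
        }
    }
}
```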

Final Code

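Combining all the steps above, we get a simple class that renders a half-transparent green box from (0, 100, 0) to (1, 101, 1) through walls. It is a client entrypoint and runs both phases in a WorldRenderEvents.BEFORE_TRANSLUCENT callback.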

```java
package com.example.docs.rendering;

import java.util.OptionalDouble;
import java.util.OptionalInt;

import com.mojang.blaze3d.buffers.GpuBuffer;
import com.mojang.blaze3d.buffers.GpuBufferSlice;
import com.mojang.blaze3d.pipeline.RenderPipeline;
import com.mojang.blaze3d.platform.DepthTestFunction;
import com.mojang.blaze3d.systems.CommandEncoder;
import com.mojang.blaze3d.systems.RenderPass;
import com.mojang.blaze3d.systems.RenderSystem;
import com.mojang.blaze3d.vertex.BufferBuilder;
import com.mojang.blaze3d.vertex.ByteBufferBuilder;
import com.mojang.blaze3d.vertex.MeshData;
import com.mojang.blaze3d.vertex.PoseStack;
import com.mojang.blaze3d.vertex.VertexFormat;
import org.joml.Matrix4f;
import org.joml.Matrix4fc;
import org.joml.Vector3f;
import org.joml.Vector4f;
import org.lwjgl.system.MemoryUtil;

import net.minecraft.client.Minecraft;
import net.minecraft.client.renderer.MappableRingBuffer;
import net.minecraft.client.renderer.RenderPipelines;
import net.minecraft.client.renderer.rendertype.RenderType;
import net.minecraft.resources.Identifier;
import net.minecraft.world.phys.Vec3;

import net.fabricmc.api.ClientModInitializer;
import net.fabricmc.fabric.api.client.rendering.v1.world.WorldRenderContext;
import net.fabricmc.fabric.api.client.rendering.v1.world.WorldRenderEvents;

import com.example.docs.ExampleMod;

public class CustomRenderPipeline implements ClientModInitializer {
    private static CustomRenderPipeline instance;

    private static final RenderPipeline FILLED_THROUGH_WALLS = RenderPipelines.register(RenderPipeline.builder(RenderPipelines.DEBUG_FILLED_SNIPPET)
            .withLocation(Identifier.fromNamespaceAndPath(ExampleMod.MOD_ID, "pipeline/debug_filled_box_through_walls"))
            .withDepthTestFunction(DepthTestFunction.NO_DEPTH_TEST)
            .build()
    );

    private static final ByteBufferBuilder allocator = new ByteBufferBuilder(RenderType.SMALL_BUFFER_SIZE);
    private BufferBuilder buffer;

    private static final Vector4f COLOR_MODULATOR = new Vector4f(1f, 1f, 1f, 1f);
    private static final Vector3f MODEL_OFFSET = new Vector3f();
    private static final Matrix4f TEXTURE_MATRIX = new Matrix4f();
    private MappableRingBuffer vertexBuffer;

    public static CustomRenderPipeline getInstance() {
        return instance;
    }

    @Override
    public void onInitializeClient() {
        instance = this;
        WorldRenderEvents.BEFORE_TRANSLUCENT.register(this::extractAndDrawWaypoint);
    }

    private void extractAndDrawWaypoint(WorldRenderContext context) {
        renderWaypoint(context);
        drawFilledThroughWalls(Minecraft.getInstance(), FILLED_THROUGH_WALLS);
    }

    private void renderWaypoint(WorldRenderContext context) {
        PoseStack matrices = context.matrices();
        Vec3 camera = context.worldState().cameraRenderState.pos;

        matrices.pushPose();
        matrices.translate(-camera.x, -camera.y, -camera.z);

        if (buffer == null) {
            buffer = new BufferBuilder(allocator, FILLED_THROUGH_WALLS.getVertexFormatMode(), FILLED_THROUGH_WALLS.getVertexFormat());
        }

        renderFilledBox(matrices.last().pose(), buffer, 0f, 100f, 0f, 1f, 101f, 1f, 0f, 1f, 0f, 0.5f);

        matrices.popPose();
    }

    private void renderFilledBox(Matrix4fc positionMatrix, BufferBuilder buffer, float minX, float minY, float minZ, float maxX, float maxY, float maxZ, float red, float green, float blue, float alpha) {
        // Front face
        buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);

        // Back face
        buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);

        // Left face
        buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);

        // Right face
        buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);

        // Top face
        buffer.addVertex(positionMatrix, minX, maxY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, maxY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, maxY, minZ).setColor(red, green, blue, alpha);

        // Bottom face
        buffer.addVertex(positionMatrix, minX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, minY, minZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, maxX, minY, maxZ).setColor(red, green, blue, alpha);
        buffer.addVertex(positionMatrix, minX, minY, maxZ).setColor(red, green, blue, alpha);
    }

    private void drawFilledThroughWalls(Minecraft client, @SuppressWarnings("SameParameterValue") RenderPipeline pipeline) {
        // Build the buffer
        MeshData builtBuffer = buffer.buildOrThrow();
        MeshData.DrawState drawParameters = builtBuffer.drawState();
        VertexFormat format = drawParameters.format();

        GpuBuffer vertices = upload(drawParameters, format, builtBuffer);

        draw(client, pipeline, builtBuffer, drawParameters, vertices, format);

        // Rotate the vertex buffer so we are less likely to use buffers that the GPU is using
        vertexBuffer.rotate();
        buffer = null;
    }

    private GpuBuffer upload(MeshData.DrawState drawParameters, VertexFormat format, MeshData builtBuffer) {
        // Calculate the size needed for the vertex buffer
        int vertexBufferSize = drawParameters.vertexCount() * format.getVertexSize();

        // Initialize or resize the vertex buffer as needed
        if (vertexBuffer == null || vertexBuffer.size() < vertexBufferSize) {
            if (vertexBuffer != null) {
                vertexBuffer.close();
            }

            vertexBuffer = new MappableRingBuffer(() -> ExampleMod.MOD_ID + " example render pipeline", GpuBuffer.USAGE_VERTEX | GpuBuffer.USAGE_MAP_WRITE, vertexBufferSize);
        }

        // Copy vertex data into the vertex buffer
        CommandEncoder commandEncoder = RenderSystem.getDevice().createCommandEncoder();

        try (GpuBuffer.MappedView mappedView = commandEncoder.mapBuffer(vertexBuffer.currentBuffer().slice(0, builtBuffer.vertexBuffer().remaining()), false, true)) {
            MemoryUtil.memCopy(builtBuffer.vertexBuffer(), mappedView.data());
        }

        return vertexBuffer.currentBuffer();
    }

    private static void draw(Minecraft client, RenderPipeline pipeline, MeshData builtBuffer, MeshData.DrawState drawParameters, GpuBuffer vertices, VertexFormat format) {
        GpuBuffer indices;
        VertexFormat.IndexType indexType;

        if (pipeline.getVertexFormatMode() == VertexFormat.Mode.QUADS) {
            // Sort the quads if there is translucency
            builtBuffer.sortQuads(allocator, RenderSystem.getProjectionType().vertexSorting());
            // Upload the index buffer
            indices = pipeline.getVertexFormat().uploadImmediateIndexBuffer(builtBuffer.indexBuffer());
            indexType = builtBuffer.drawState().indexType();
        } else {
            // Use the general shape index buffer for non-quad draw modes
            RenderSystem.AutoStorageIndexBuffer shapeIndexBuffer = RenderSystem.getSequentialBuffer(pipeline.getVertexFormatMode());
            indices = shapeIndexBuffer.getBuffer(drawParameters.indexCount());
            indexType = shapeIndexBuffer.type();
        }

        // Actually execute the draw
        GpuBufferSlice dynamicTransforms = RenderSystem.getDynamicUniforms()
                .writeTransform(RenderSystem.getModelViewMatrix(), COLOR_MODULATOR, MODEL_OFFSET, TEXTURE_MATRIX);

        try (RenderPass renderPass = RenderSystem.getDevice()
                .createCommandEncoder()
                .createRenderPass(() -> ExampleMod.MOD_ID + " example render pipeline rendering", client.getMainRenderTarget().getColorTextureView(), OptionalInt.empty(), client.getMainRenderTarget().getDepthTextureView(), OptionalDouble.empty())) {
            renderPass.setPipeline(pipeline);

            RenderSystem.bindDefaultUniforms(renderPass);
            renderPass.setUniform("DynamicTransforms", dynamicTransforms);

            // Bind texture if applicable:
            // Sampler0 is used for texture inputs in vertices
            // renderPass.bindTexture("Sampler0", textureSetup.texure0(), textureSetup.sampler0());

            renderPass.setVertexBuffer(0, vertices);
            renderPass.setIndexBuffer(indices, indexType);

            // The base vertex is the starting index when we copied the data into the vertex buffer divided by vertex size
            //noinspection ConstantValue
            renderPass.drawIndexed(0 / format.getVertexSize(), 0, drawParameters.indexCount(), 1);
        }

        builtBuffer.close();
    }

    public void close() {
        allocator.close();

        if (vertexBuffer != null) {
            vertexBuffer.close();
            vertexBuffer = null;
        }
    }
}
```


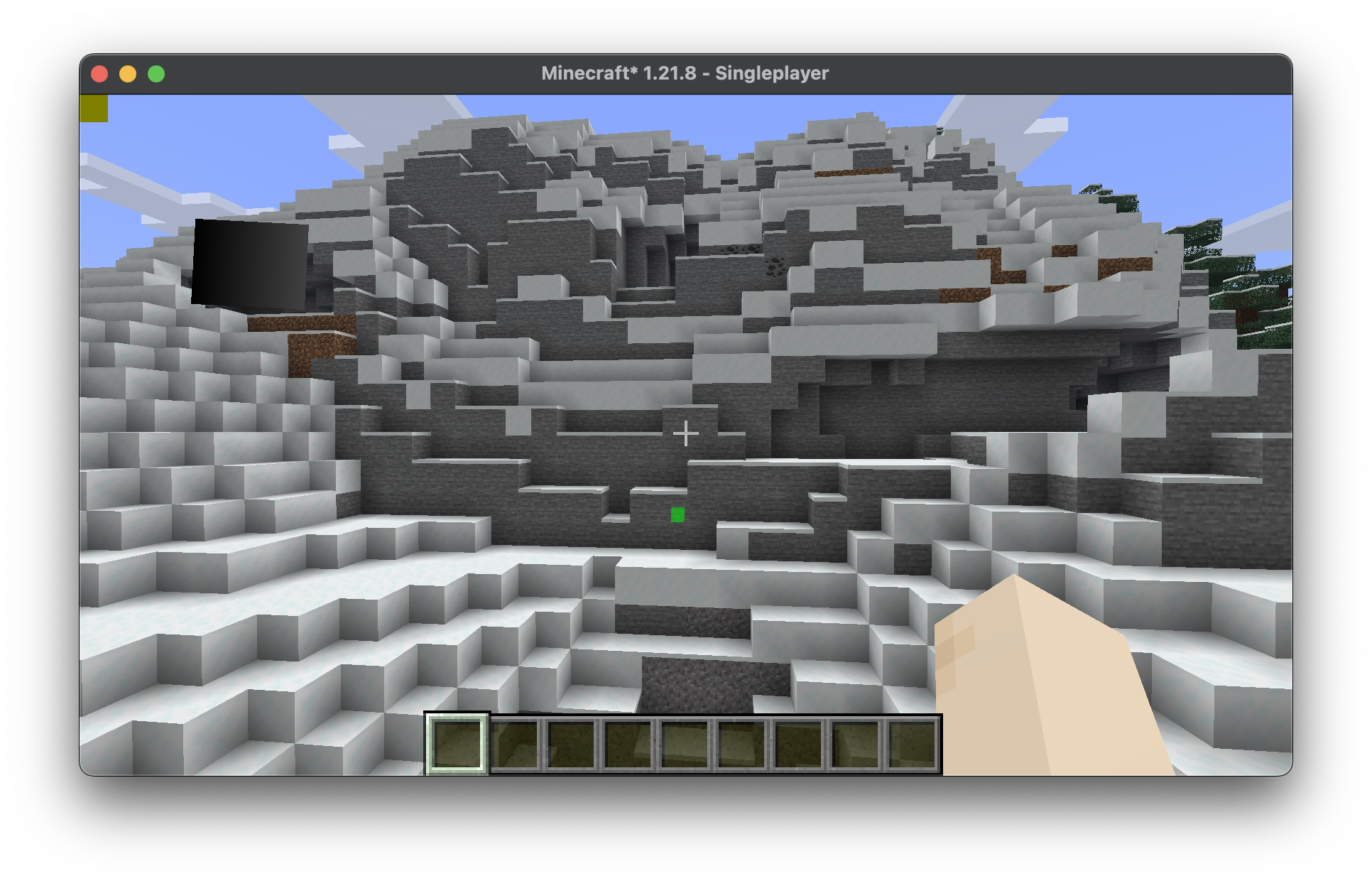

Don't forget the GameRendererMixin as well! Here is the result: